Adaptive Portfolio Optimization using Deep Reinforcement Learning and Generative Models

DOI:

https://doi.org/10.70882/josrar.2026.v3i1.134

Keywords:

Deep Reinforcement Learning (DRL), Generative Adversarial Networks (GANs), Portfolio Optimization, Soft Actor-Critic (SAC), Cryptocurrency Trading

Abstract

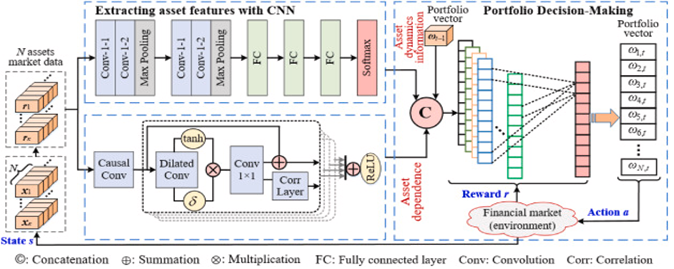

Cryptocurrency financial markets are characterized by high volatility and non-stationary price dynamics, posing significant challenges to traditional portfolio optimization techniques that rely on static risk–return assumptions. In such environments, existing methods often struggle to generalize and adapt effectively, leading to suboptimal performance and increased downside risk. To address these limitations, this paper proposes a novel adaptive portfolio optimization framework that integrates Generative Adversarial Networks (GANs) for synthetic data augmentation with a state-of-the-art Soft Actor-Critic (SAC) deep reinforcement learning (DRL) agent. By augmenting real historical OHLC data with realistic TimeGAN-generated price sequences, the proposed approach exposes the DRL agent to a broader range of market scenarios, thereby improving generalization and mitigating overfitting. A convolutional neural network (CNN) feature extractor captures deep temporal dependencies, while causal and dilated convolutions model complex inter-asset correlations. Empirical results demonstrate that the proposed GAN–SAC hybrid consistently outperforms conventional strategies and the baseline Deep Portfolio Optimization (DPO) model, achieving a higher Accumulative Portfolio Value (APV) of 53.72, an improved Sharpe Ratio of 0.0980, and a reduced Maximum Drawdown (MDD) of 28.5%. These findings confirm the effectiveness of combining generative models and DRL to develop robust, adaptive portfolio strategies capable of navigating highly volatile cryptocurrency markets, with practical implications for next-generation algorithmic trading systems requiring enhanced resilience and dynamic risk control.
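The three evaluation metrics reported above can be illustrated with a minimal sketch. It assumes the commonly used definitions: APV as final portfolio value relative to an initial value of 1, the Sharpe Ratio as the mean excess per-period return divided by its standard deviation (not annualized), and MDD as the largest peak-to-trough decline. The function name `portfolio_metrics`, its parameters, and the zero default risk-free rate are illustrative assumptions, not the paper's implementation.

```python
import math
from statistics import mean, pstdev


def portfolio_metrics(period_returns, risk_free=0.0):
    """Return (APV, Sharpe ratio, MDD) for a list of simple per-period returns.

    Hypothetical helper: the definitions are the standard ones and are
    assumed here, not taken from the paper.
    """
    value = 1.0          # portfolio value, normalised to 1 at the start
    peak = 1.0           # running maximum of the portfolio value
    max_drawdown = 0.0   # largest relative drop from a previous peak
    for r in period_returns:
        value *= 1.0 + r
        peak = max(peak, value)
        max_drawdown = max(max_drawdown, (peak - value) / peak)

    # Per-period Sharpe ratio using the population standard deviation.
    excess = [r - risk_free for r in period_returns]
    spread = pstdev(excess)
    sharpe = mean(excess) / spread if spread > 0 else math.nan

    return value, sharpe, max_drawdown


if __name__ == "__main__":
    apv, sharpe, mdd = portfolio_metrics([0.10, -0.20, 0.25])
    print(f"APV={apv:.4f}  Sharpe={sharpe:.4f}  MDD={mdd:.2%}")
```

Under these definitions a higher APV and Sharpe Ratio and a lower MDD indicate better performance, which is the direction of improvement the abstract reports.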

License

Copyright (c) 2026 Journal of Science Research and Reviews

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.

- Attribution — You must give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use.

- NonCommercial — You may not use the material for commercial purposes.

- No additional restrictions — You may not apply legal terms or technological measures that legally restrict others from doing anything the license permits.